While NSX is an awesome product, there are 3 things that I haven't liked from the very beginning of the product:

- Management: You can only manage NSX from your vSphere Web Client. The UX is not great: it's a Web Client plugin that VMware never really got to a decent level… No wonder most VMware admins prefer the vSphere Thick Client (which is not an option for Network and Security admins).

- NSX only supports ESXi as a hypervisor. It's the best one, I'll admit, but today's Cloud requires MORE flexibility, not less…

- No way of managing the Physical Network (Underlay). Regardless of the Overlay Networking philosophy, and the fact that NSX would work perfectly over most low-latency, high-throughput Data Center Fabrics, I've seen cases where this was a deal breaker.

I'm happy to say that VMware actually addressed 2 of my 3 complaints with NSX-T:

- You can now manage NSX using a separate GUI, all HTML5!!! Finally!

- NSX-T supports KVM, and will potentially support a bunch of other integrations… and yeah, CONTAINERS!!!

No wonder I wanted to try it as soon as it was available for download. So I did… and wow, was that a pleasant surprise!!! The installation process is just so clean and nice, I was truly impressed. In this post I'll just cover the Manager and Control Cluster part, and in my next post I'll proceed with the Hypervisor integration and the Data Plane.

Before I proceed with the deep technical stuff, here are a few facts to help you fall in love with NSX-T:

- It's unofficially called "Transformers" because different releases have different Transformers names.

- The NSX license is unified. This means that you can buy one license and use it with either NSX-V (the vSphere-integrated version) or NSX-T.

- It supports Containers!!! Yes, I'm aware I always end this with 3 exclamations.

- NSX-T is too cool for VXLAN, so it's using a newer and cooler protocol, GENEVE*.

*VXLAN and NVGRE headers both include a 24-bit virtual network identifier field. STT specifies a 64-bit field. None of them requires modifying or replacing existing switches and routers, although some equipment vendors have developed hardware assists to increase the efficiency of one or more of the solutions. Now a new network virtualization standard has emerged, Generic Network Virtualization Encapsulation (GENEVE), that promises to address the perceived limitations of the earlier specifications and support all of the capabilities of VXLAN, NVGRE and STT. Also, very few actually use the "basic" VXLAN implementation; most vendors, like Cisco and VMware, use improved "on steroids" versions, which makes them mutually incompatible. Many believe GENEVE could eventually replace these earlier formats entirely. More details here.
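To make the header talk a bit more concrete, here is a minimal sketch of the fixed 8-byte GENEVE base header as I understand it from RFC 8926: version, option length, flags, protocol type, a 24-bit VNI and a reserved byte. The function names are mine, it only handles the base header plus opaque option bytes, and it has nothing to do with how NSX-T builds packets internally.

```python
import struct

GENEVE_UDP_PORT = 6081   # IANA-assigned UDP destination port for GENEVE
ETHERTYPE_TEB = 0x6558   # protocol type for an encapsulated Ethernet frame


def pack_geneve_header(vni: int, options: bytes = b"") -> bytes:
    """Build a GENEVE base header (hypothetical helper, illustration only)."""
    if not 0 <= vni < 2 ** 24:
        raise ValueError("VNI must fit in 24 bits")
    if len(options) % 4 != 0 or len(options) > 63 * 4:
        raise ValueError("options must be a multiple of 4 bytes, at most 252")
    # First byte: 2-bit version (0) followed by 6-bit option length in 4-byte words.
    ver_optlen = (0 << 6) | (len(options) // 4)
    flags = 0  # O (control) and C (critical) bits cleared, reserved bits zero
    # Last 32 bits: 24-bit VNI followed by 8 reserved bits.
    vni_and_reserved = vni << 8
    base = struct.pack("!BBHI", ver_optlen, flags, ETHERTYPE_TEB, vni_and_reserved)
    return base + options


def unpack_vni(header: bytes) -> int:
    """Extract the 24-bit VNI from a GENEVE base header."""
    _, _, _, vni_and_reserved = struct.unpack("!BBHI", header[:8])
    return vni_and_reserved >> 8


if __name__ == "__main__":
    hdr = pack_geneve_header(vni=5001)
    print(hdr.hex(), unpack_vni(hdr))
```

The variable-length options after the base header are what make GENEVE extensible compared with the fixed VXLAN header.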

NSX-T Installation

Step 1: Install an NSX Manager and 3 NSX Controllers as OVA files to vCenter or KVM. You will need 4 mutually routed IP addresses.

If you need instructions on how to deploy an OVA in your vCenter, you're at the wrong blog :)

Step 2: SSH into the NSX Manager and all of the Controllers, and join them manually:

NSX Manager

NSX CLI (Manager 2.1.0.0.0.7395503). Press ? for command list or enter: help

nsx-t-manager>

nsx-t-manager> get certificate api thumbprint

3619a99a934ddacf06792ea0cf0566c1d49223ac5117a73d95c31e5c482ef868

nsx-t-manager>

Controllers

(repeat this on all 3 controllers, even though you don't have the Control Cluster built yet):

NSX CLI (Controller 2.1.0.0.0.7395493). Press ? for command list or enter: help

nsx-t-controller-1>

nsx-t-controller-1>

nsx-t-controller-1> join management-plane NSX-Manager username admin thumbprint 3619a99a934ddacf06792ea0cf0566c1d49223ac5117a73d95c31e5c482ef868

% Invalid value for argument

nsx-t-controller-1> join management-plane 10.20.41.120:443 username admin thumbprint 3619a99a934ddacf06792ea0cf0566c1d49223ac5117a73d95c31e5c482ef868

Password for API user:

Node successfully registered and controller restarted

nsx-t-controller-1>

nsx-t-controller-1> get managers

- 10.20.41.120 Connected

nsx-t-controller-1>
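Since the same join line has to be typed on all 3 controllers, a small helper that builds it can save you from typos. This is just a sketch: the function name is mine, and the checks (an IP address instead of a hostname, a 64-character lowercase hex thumbprint) are assumptions based on nothing more than the transcript above, where the hostname form was rejected and the IP:port form worked.

```python
import ipaddress
import re

# Assumption: thumbprints look like the one in the transcript (64 lowercase hex chars).
THUMBPRINT_RE = re.compile(r"[0-9a-f]{64}")


def join_management_plane_cmd(manager_ip: str, thumbprint: str,
                              username: str = "admin", port: int = 443) -> str:
    """Return the CLI line to paste on a controller (hypothetical helper)."""
    # Raises ValueError for anything that is not a literal IP address,
    # e.g. the hostname that produced "% Invalid value for argument" above.
    ipaddress.ip_address(manager_ip)
    if not THUMBPRINT_RE.fullmatch(thumbprint):
        raise ValueError("thumbprint does not look like 64 hex characters")
    return (f"join management-plane {manager_ip}:{port} "
            f"username {username} thumbprint {thumbprint}")


if __name__ == "__main__":
    tp = "3619a99a934ddacf06792ea0cf0566c1d49223ac5117a73d95c31e5c482ef868"
    print(join_management_plane_cmd("10.20.41.120", tp))
```

The password is still prompted for interactively, so nothing secret ends up in the generated line.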

Verify on the NSX Manager:

nsx-t-manager> get management-cluster status

Number of nodes in management cluster: 1

- 10.20.41.120 (UUID 4207150A-9BB0-6283-181E-AAB3924321BF) Online

Management cluster status: STABLE

Number of nodes in control cluster: 1

- 10.20.41.121 (UUID cf4e1847-508e-4a73-96df-c2311f96a55b)

Control cluster status: UNSTABLE

Now the other 2 Controllers:

nsx-t-controller-2> join management-plane 10.20.41.120:443 username admin thumbprint 3619a99a934ddacf06792ea0cf0566c1d49223ac5117a73d95c31e5c482ef868

Password for API user:

Node successfully registered and controller restarted

nsx-t-controller-3> join management-plane 10.20.41.120:443 username admin thumbprint 3619a99a934ddacf06792ea0cf0566c1d49223ac5117a73d95c31e5c482ef868

Password for API user:

Node successfully registered and controller restarted

nsx-t-manager> get management-cluster status

Number of nodes in management cluster: 1

- 10.20.41.120 (UUID 4207150A-9BB0-6283-181E-AAB3924321BF) Online

Management cluster status: STABLE

Number of nodes in control cluster: 3

- 10.20.41.121 (UUID cf4e1847-508e-4a73-96df-c2311f96a55b)

- 10.20.41.122 (UUID 4517abc2-5244-4327-a3c8-739ac18b0fd7)

- 10.20.41.123 (UUID 22ab7f0a-02a4-42b0-93b0-f8cce56c4200)

Control cluster status: UNSTABLE

Note that the Control Cluster is still UNSTABLE. Let's fix this…
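If you'd rather check this from a script than by eye, the status output is easy to parse. Here is a rough sketch that works on the text exactly as it appears in the transcript above. The function name and the dictionary layout are my own, and other NSX-T versions may well format the output differently.

```python
import re

SAMPLE = """\
Number of nodes in management cluster: 1
- 10.20.41.120 (UUID 4207150A-9BB0-6283-181E-AAB3924321BF) Online
Management cluster status: STABLE
Number of nodes in control cluster: 3
- 10.20.41.121 (UUID cf4e1847-508e-4a73-96df-c2311f96a55b)
- 10.20.41.122 (UUID 4517abc2-5244-4327-a3c8-739ac18b0fd7)
- 10.20.41.123 (UUID 22ab7f0a-02a4-42b0-93b0-f8cce56c4200)
Control cluster status: UNSTABLE
"""


def parse_cluster_status(text: str) -> dict:
    """Parse 'get management-cluster status' output (hypothetical helper)."""
    result = {}
    current = None  # section the node lines currently belong to

    def section(name: str) -> dict:
        return result.setdefault(name, {"count": 0, "nodes": [], "status": None})

    for raw in text.splitlines():
        line = raw.strip()
        m = re.match(r"Number of nodes in (management|control) cluster: (\d+)", line)
        if m:
            current = m.group(1)
            section(current)["count"] = int(m.group(2))
            continue
        m = re.match(r"- (\S+) \(UUID ([0-9A-Fa-f-]+)\)", line)
        if m and current:
            section(current)["nodes"].append(m.group(1))
            continue
        m = re.match(r"(Management|Control) cluster status: (\w+)", line)
        if m:
            section(m.group(1).lower())["status"] = m.group(2)
    return result


if __name__ == "__main__":
    status = parse_cluster_status(SAMPLE)
    print(status["control"]["status"], status["control"]["nodes"])
```

Blank lines between the output lines are ignored, so pasting the CLI output as-is should be fine.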

Step 3: Build the control cluster:

After installing the first NSX Controller in your NSX-T deployment, you can initialise the control cluster. Initialising the control cluster is required even if you are setting up a small proof-of-concept environment with only one controller node. If you do not initialise the control cluster, the controller is not able to communicate with the hypervisor hosts.

Let's first make a Controller Cluster while we only have 1 controller:

set control-cluster security-model shared-secret

nsx-t-controller-1>

nsx-t-controller-1> set control-cluster security-model shared-secret

Secret:

Security secret successfully set on the node.

nsx-t-controller-1> initialize control-cluster

Control cluster initialization successful.

nsx-t-controller-1> get control-cluster status verbose

NSX Controller Status:

uuid: cf4e1847-508e-4a73-96df-c2311f96a55b

is master: false

in majority: false

This node has not yet joined the cluster.

Cluster Management Server Status:

uuid rpc address rpc port global id vpn address status

f0c3fc47-b07c-4db2-9b9d-f4df7ad2aa62 10.20.41.121 7777 1 169.254.1.1 connected

Zookeeper Ensemble Status:

Zookeeper Server IP: 169.254.1.1, reachable, ok

Zookeeper version: 3.5.1-alpha--1, built on 12/16/2017 13:13 GMT

Latency min/avg/max: 0/0/14

Received: 212

Sent: 228

Connections: 2

Outstanding: 0

Zxid: 0x10000001d

Mode: leader

Node count: 23

Connections: /169.254.1.1:40606[1](queued=0,recved=204,sent=221,sid=0x1000034b54f0001,lop=GETD,est=1514475848954,to=40000,lcxid=0xc9,lzxid=0x10000001d,lresp=3485930,llat=0,minlat=0,avglat=0,maxlat=8)

/169.254.1.1:40652[0](queued=0,recved=1,sent=0)

nsx-t-controller-1>

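To make this "STABLE", build a real control cluster out of all 3 Controllers. With the other Controllers already joined to the NSX Manager, set the same shared secret on each of them and get its certificate thumbprint: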

nsx-t-controller-2> set control-cluster security-model shared-secret secret SHARED_SECRET_YOU_SET_ON_CONTROLLER_1

Security secret successfully set on the node.

nsx-t-controller-2> get control-cluster certificate thumbprint

ef25c0c7195907387874ff83c0ce0b1775c9a190c2b27c82f9ad1db3da279c3d

Once you have those, go to the MASTER Controller, and join the other Controllers to the Cluster:

nsx-t-controller-1>

nsx-t-controller-1> join control-cluster 10.20.41.122 thumbprint ef25c0c7195907387874ff83c0ce0b1775c9a190c2b27c82f9ad1db3da279c3d

Node 10.20.41.122 has successfully joined the control cluster. Please run 'activate control-cluster' command on the new node.

nsx-t-controller-1> join control-cluster 10.20.41.123 thumbprint f192c6e8f33b5c9566db6d5bcd5a305ca7ab2805c36b2bd940e1866e43039274

Node 10.20.41.123 has successfully joined the control cluster. Please run 'activate control-cluster' command on the new node.

nsx-t-controller-1>

Now go back to Controllers 2 and 3, and activate the cluster on each of them:

nsx-t-controller-2> activate control-cluster

Control cluster activation successful.

nsx-t-controller-1> get control-cluster status

uuid: cf4e1847-508e-4a73-96df-c2311f96a55b

is master: false

in majority: true

uuid address status

cf4e1847-508e-4a73-96df-c2311f96a55b 10.20.41.121 active

4517abc2-5244-4327-a3c8-739ac18b0fd7 10.20.41.122 active

22ab7f0a-02a4-42b0-93b0-f8cce56c4200 10.20.41.123 active

nsx-t-controller-1>
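A quick aside on the "in majority" field: I'm assuming it follows the usual strict-majority quorum rule that ZooKeeper-style ensembles use, which is also why you want 3 controllers and not 2. The sketch below parses the node table from the output above and applies that rule. The helper names are mine and the quorum interpretation is my assumption, not something taken from the NSX-T docs.

```python
def parse_nodes(text: str) -> list:
    """Return (uuid, address, status) rows from 'get control-cluster status' output."""
    rows = []
    for line in text.splitlines():
        parts = line.split()
        # A node row has exactly 3 columns and starts with a UUID (4 hyphens).
        if len(parts) == 3 and parts[0].count("-") == 4:
            rows.append(tuple(parts))
    return rows


def has_majority(active: int, total: int) -> bool:
    """Strict majority: more than half of the configured nodes are active."""
    return active > total // 2


if __name__ == "__main__":
    output = """\
uuid address status
cf4e1847-508e-4a73-96df-c2311f96a55b 10.20.41.121 active
4517abc2-5244-4327-a3c8-739ac18b0fd7 10.20.41.122 active
22ab7f0a-02a4-42b0-93b0-f8cce56c4200 10.20.41.123 active
"""
    nodes = parse_nodes(output)
    active = sum(1 for _, _, status in nodes if status == "active")
    print(len(nodes), active, has_majority(active, len(nodes)))
```

Under this rule a 3-node cluster keeps its majority with one node down, while a 2-node cluster loses it as soon as either node fails.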

nsx-t-manager> get management-cluster status

Number of nodes in management cluster: 1

- 10.20.41.120 (UUID 4207150A-9BB0-6283-181E-AAB3924321BF) Online

Management cluster status: STABLE

Number of nodes in control cluster: 3

- 10.20.41.121 (UUID cf4e1847-508e-4a73-96df-c2311f96a55b)

- 10.20.41.122 (UUID 4517abc2-5244-4327-a3c8-739ac18b0fd7)

- 10.20.41.123 (UUID 22ab7f0a-02a4-42b0-93b0-f8cce56c4200)

Control cluster status: STABLE

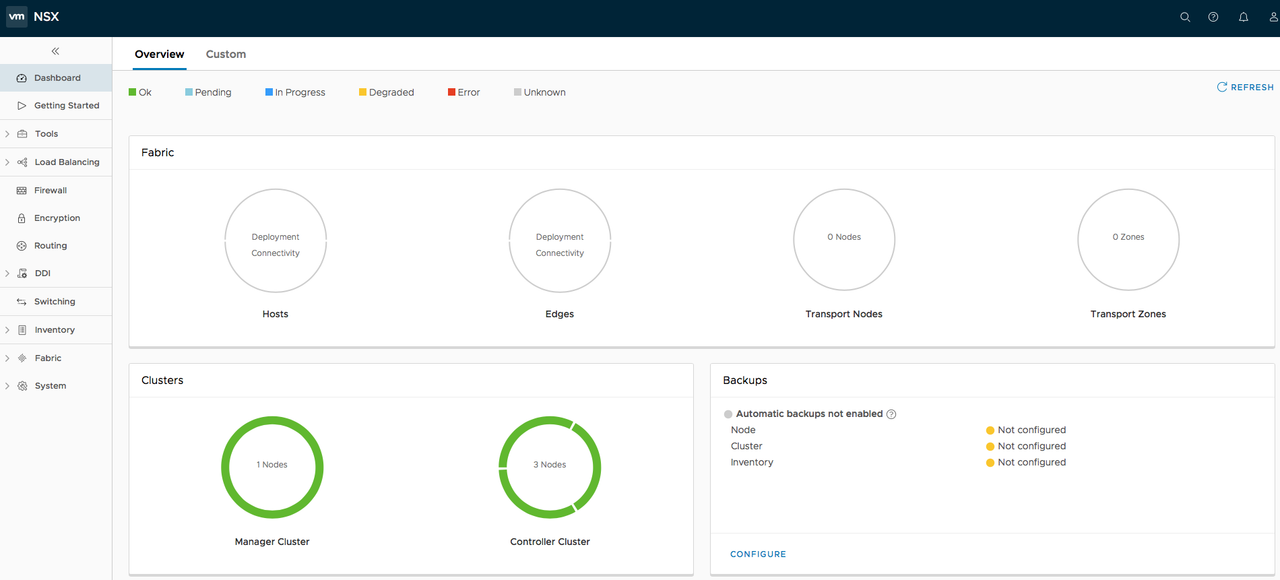

Let's now check out the GUI (you'll LOVE the NSX-T graphical interface btw):

That's it!!! Now let's go integrate it with the Hypervisors...